Tesla Faults Brakes, but Not Autopilot, in Fatal Crash

Tesla Motors has told Senate investigators that its crash-prevention system failed to work properly in a fatal crash, but said its Autopilot technology was not at fault, according to a Senate staff member.

Instead, Tesla told members of the Senate Commerce Committee staff on Thursday that the problem involved the car's automatic braking system, said the staff member, who spoke on condition of anonymity.

It was not clear how or why Tesla considers the automatic braking system to be separate from Autopilot, which combines automated steering, adaptive cruise control and other features meant to avoid accidents. Tesla declined to comment on Friday.

"Those systems are supposed to work together to prevent an accident," said Karl Brauer, a senior analyst at Kelley Blue Book, an auto research firm. "But either the car didn't know it had to stop, or it did know and wasn't able to stop. That involves Autopilot and the automatic braking."

The company told the committee staff that it considered the braking systems "separate and distinct" from Autopilot, which manages the car's steering and can change lanes and adjust travel speed, the staff member said.

That argument is consistent with the company's continued resistance to critics' calls to disable Autopilot. Tesla's chief executive, Elon Musk, and other company officials have repeatedly defended Autopilot, describing it as a lifesaving technology.

The meeting on Thursday, which involved Tesla engineering executives, was in response to a request from the committee for more information about Autopilot and the cause of the crash in Florida.

The executives also urged against governmental action that could slow introduction of automated-driving technology, according to the staff member. Tesla expressed the view that while some deaths might occur as automakers developed and perfected these kinds of innovations, the safety benefits outweighed the risks, this person said.

Tesla is still trying to determine whether the car's radar and camera systems failed to detect a tractor-trailer that was crossing the roadway, or whether they saw the truck but misidentified it as an overpass or overhead road sign.

In a Twitter posting last month, Tesla's chief noted that the radar system "tunes out" readings that appear to be overhead signs to prevent the car from braking unnecessarily.

The information presented at the meeting is the most extensive explanation Tesla has given so far for what role its Autopilot system might have played in the crash, which took the life of Joshua Brown, 40, an entrepreneur from Ohio.

Mr. Brown was traveling on a divided highway in Williston, Fla., with Autopilot controlling his Tesla Model S when it crashed into a tractor-trailer rig that had made a left turn in front of the car.

The company has previously said that neither the car's Autopilot system nor Mr. Brown activated the brakes before the impact and noted that the radar and camera systems might have failed to detect the white truck against a bright sky.

The reliability of Autopilot is a sensitive matter for Tesla because the company tries to present itself to customers and investors as a technology company rather than an automaker, said Mr. Brauer, the Kelley Blue Book analyst.

"Autopilot is a key component of the image Tesla wants to project," he said. "They've made a big deal about it. So they don't want to be in the position of saying that there's something wrong with something that's part of the whole company's image."

Tesla was also due on Friday to provide the first batch of information requested by the National Highway Traffic Safety Administration, which is investigating whether a safety defect exists in Autopilot or other safety systems. Tesla declined to say whether it would meet the Friday deadline.

The National Transportation Safety Board, which typically examines airplane and train accidents, is also investigating. It has found that the car was traveling at 74 miles per hour, nine miles per hour over the speed limit.

Tesla is scheduled to report its second-quarter financial results on Wednesday. Analysts expect a loss, as the company spends heavily to increase production of a new Model X sport utility vehicle, finish the development of a more affordable Model 3 compact and start production at the gigantic battery factory that it is opening in Nevada.

It is unclear whether the fatal crash and questions about the Autopilot technology are hurting Tesla's sales or reputation. The company delivered 14,370 cars in the second quarter, fewer than expected, which it attributed to 5,150 cars still in transit to customers. A year earlier, it delivered 11,507 cars in the second quarter.

A repercussion of the Florida crash is that Mobileye, the Israeli provider of image-processing technology used in Autopilot, is no longer supplying Tesla because of disagreements over how the system is being used.

Consumer Reports magazine also publicly called on Tesla to disable the Autopilot's automatic steering function until it can find a way to make sure drivers keep their hands on the steering wheel while using it.

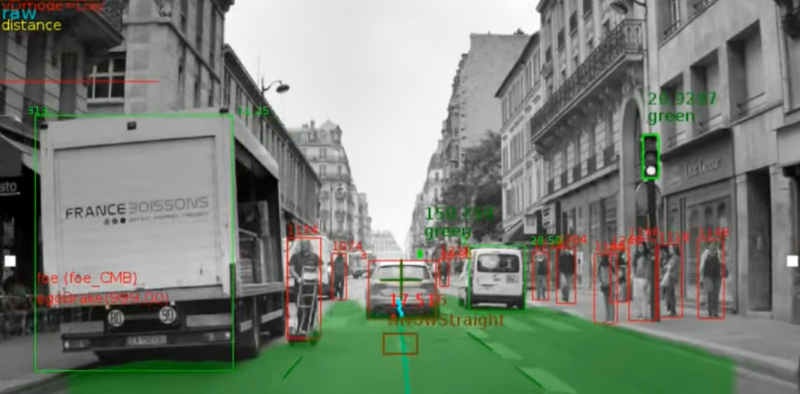

Autopilot consists of a series of safety components that can steer, brake, overtake other vehicles and maintain lane position automatically with little input from the driver. The system warns drivers to keep their hands on the steering wheel and remain alert. But many drivers, Mr. Brown included, have posted videos on YouTube showing it is possible to go several minutes without looking at the road or holding the wheel.

Source: The New York Times

-

Elon Musk confident that Tesla can attain staggering 20-fold increase in production speed in Fremont

-

Tesla responds to Mobileye’s comments on Autopilot, confirms new in-house ‘Tesla Vision’ product

-

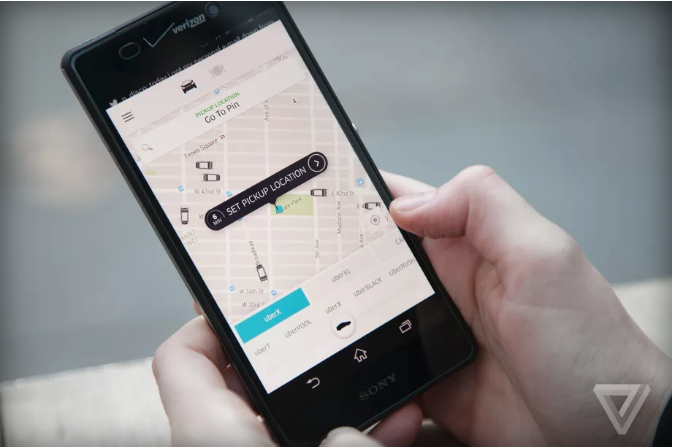

Uber will repay thousands of riders for misleading them about tips

-

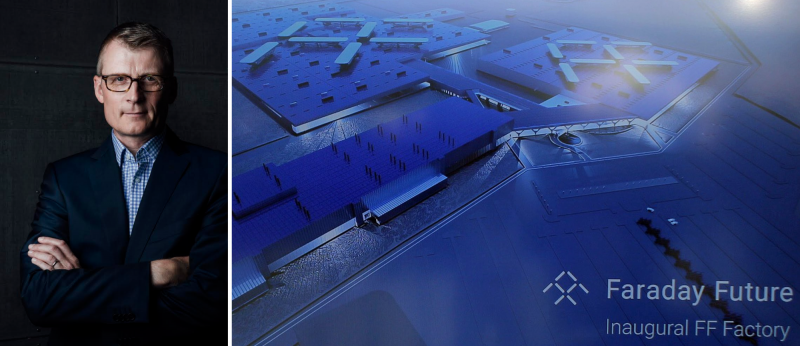

Faraday Future adds top VW executive to bring to market its upcoming electric vehicles

-

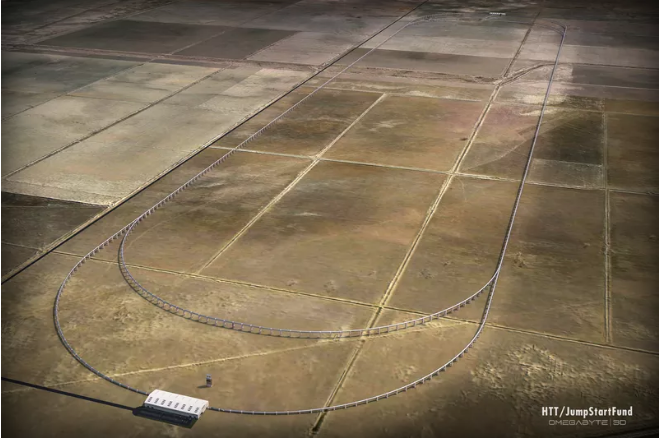

Hyperloop track construction in California is delayed

-

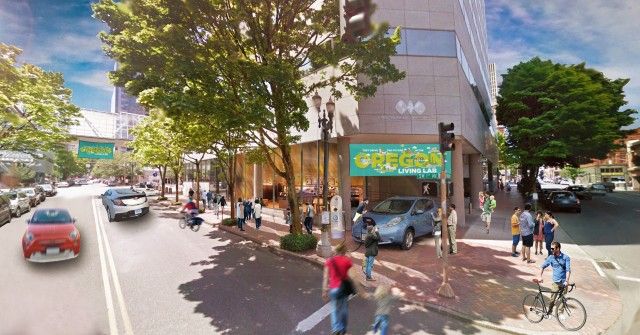

Federal funds to back Portland storefront for electric-car marketing, pop-up roadshows

-

Tesla announces a partnership in South Korea for first store and 25 charging stations

-

DOE small biz voucher awards include vehicles and fuel cells

- California’s Electric Vehicle Sales for Q1-Q3 2022 Show That Tesla is Facing Growing Competition

- 2023 Jeep Grand Cherokee Trailhawk Now PHEV Only

- Solid State Battery Startup Solid Power Completes its EV Cell Pilot Production Line

- Hyundai Unveils the IONIQ 6 ‘Electric Streamliner’, its Bold Sedan With a 380-Mile Range and 18-Minute Charging Time

- Ralph Nader Calls for NHTSA to Remove Tesla Full Self-Driving

- EV Startup VinFast is Offering 3 Years of Free EV Charging and Advanced Driver Assist System for Customers That Reserve a Vehicle Through Sept 30

- US-listed LiDAR Developers Velodyne and Ouster to Merge in an All-Stock Deal

- Hyundai Gets Serious About Electric Performance Cars, Shows off Two Concepts

- Qualcomm and its Industry Partners Demonstrate C-V2X Technology in Georgia That Ensures School Buses and Fire Trucks Never Get Stuck at Red Lights

- Apple Reveals the Next-Gen Version of CarPlay, Which Takes Over a Vehicle’s Entire Dashboard and Screens
