Sensors give smart cars a sixth sense

【Summary】NXP Automotive CTO Lars Reger told delegates at NXP’s ‘FTF Connects’ conference in San Jose in June 2017, “An autonomous car can only be as good as its environmental sensing,” implying that cars will need human-like senses to contextually perceive and understand the world around them.

In 2016 then-President Obama told the American nation that automated vehicles (AVs) could cut the yearly road death toll by tens of thousands, quoting NHTSA's claims that the technology could potentially avert or mitigate 19 of every 20 crashes on the road. The message was clear: Robots will be better drivers than humans.

But, as NXP Automotive CTO Lars Reger told delegates at NXP's ‘FTF Connects' conference in San Jose in June 2017, "An autonomous car can only be as good as its environmental sensing." The implication is that cars will need human-like senses to contextually perceive and understand the world around them.

Image Source: medium.com

Sensors that replicate human senses

For self-driving cars to be accepted by consumers, they will need to replicate human cognitive behavior, which is made up of a three-part process:

1) Perception of the environment

2) Decision making based on this perception of the surroundings

3) Timely and accurate implementation of the decision

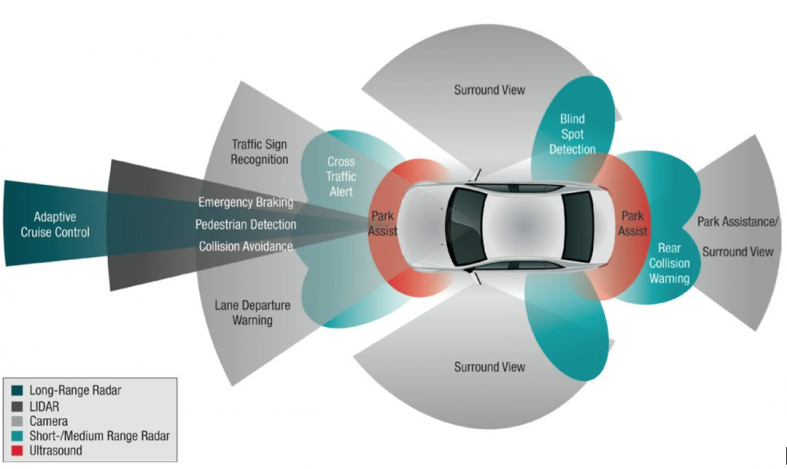

In the automated vehicle, perception is formed by sensors such as cameras (including heat-detecting infrared cameras), radar, lidar, and ultrasonic sensors; decisions are made by artificial intelligence (AI), algorithms and processing; and control of the vehicle is handled by actuators.

The sensors used to create the perception can further be broken down into two categories:

Proprioceptive Sensors – responsible for measuring the vehicle's internal functions such as wheel speed, inertial measurement and driver attentiveness

Exteroceptive Sensors – responsible for sensing the vehicle's surroundings

The exteroceptive sensors are of particular importance to autonomous functionality as they sense the external environment. In so doing it is critical that these devices identify all important objects on or near the road; accurately categorizing other vehicles, pedestrians, debris and, in some cases, road features like signs and lane markings.

These exteroceptive sensors, due to their strengths and weaknesses, all have specific roles to play in safely guiding the AV:

360° and Flash Lidars have high resolution but are not effective in heavy snow and rain

Radar can detect objects at greater distances, regardless of the weather, but only at low resolution

Ultrasonic sensors are well suited to judging a car's distance to objects, but only at short range

Cameras, on the other hand, lead the way in object classification and texture interpretation. Although they are by far the cheapest and most widely used sensors, cameras generate huge amounts of data and depend on good visibility

Because camera-based vision systems are extremely good at interpreting the environment, most sensor arrays rely heavily on their input, often using convolutional neural networks (CNNs), which are computationally efficient at analyzing images, to interpret the recordings. CNNs consist of sets of algorithms that extract meaningful information from sensor input, thereby accurately identifying objects such as cars, people, animals, road signs, road junctions, kerbs, and road markings. This enables the vehicle to ascertain the ‘relevant reality' of the scene.

A CNN is made up of many ‘layers' which break down image recognition into specific tasks – from finding edges, corners and circles, to identifying them as road signs, to reading what the road sign says.
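The earliest layers of such a network behave much like classic edge filters. The following is a minimal sketch of that idea, not a trained network: the kernel holds hand-picked Sobel weights rather than learned ones, and the names `convolve2d`, `relu` and the toy image are illustrative assumptions.

```python
def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation, the core operation of a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = 0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    """Keep positive responses only, as a CNN activation would."""
    return [[max(0, v) for v in row] for row in feature_map]


# Hand-picked Sobel kernel that responds to dark-to-bright vertical edges.
# In a real CNN these weights would be learned during training.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]

# Toy 4x6 image: dark on the left (0), bright on the right (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

edges = relu(convolve2d(image, SOBEL_X))
for row in edges:
    print(row)
```

The non-zero responses line up with the columns where the brightness changes. Deeper layers combine many such feature maps to recognize corners, shapes and eventually whole objects.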

Applying machine learning to these networks requires significant datasets to train the CNN to identify the various objects. However, once the system has been trained, it's possible for the vehicle to accomplish tasks through inference. This enables the network to accurately interpret situations in real time, based on its trained ‘knowledge' of images.

For example, if a car ahead brakes so suddenly that a human driver could not react in time, an automated car following would instantly verify the distance, sense the speed and apply the brakes faster than any human would be able to do.
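The benefit of a shorter reaction time can be estimated with basic kinematics. This is a rough sketch only: the reaction times (1.5 s for a human, 0.1 s for an automated system) and the 7 m/s² deceleration are illustrative assumptions, not figures from the article or from any specific vehicle.

```python
def stopping_distance(speed_mps, reaction_s, decel_mps2):
    """Distance covered during the reaction delay plus the braking distance."""
    return speed_mps * reaction_s + speed_mps ** 2 / (2 * decel_mps2)


def time_to_collision(gap_m, closing_speed_mps):
    """Seconds until impact if neither vehicle changes speed."""
    if closing_speed_mps <= 0:
        return float("inf")  # not closing in on the car ahead
    return gap_m / closing_speed_mps


speed = 100 / 3.6  # 100 km/h expressed in m/s

# Assumed reaction times and deceleration (illustrative values only).
human = stopping_distance(speed, reaction_s=1.5, decel_mps2=7.0)
automated = stopping_distance(speed, reaction_s=0.1, decel_mps2=7.0)

print(f"human: {human:.1f} m, automated: {automated:.1f} m")
print(f"distance saved: {human - automated:.1f} m")
```

Under these assumptions the braking distance itself is identical; the roughly 39 m saved comes entirely from the shorter reaction delay.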

However, for this function to operate safely the cameras would need good visibility, which may not always be available. Therefore self-driving vehicles rely on the input from different types of sensors to best interpret the situation in all conditions.

For that reason it's common practice to fuse sensors such as radar and cameras: A camera that works in the visible spectrum has problems in poor visibility conditions like rain, dense fog, sun glare, and absence of light, but has high reliability when recognizing colors (for example, road markings). Radar, even at low resolution, is useful for detecting objects at a distance and is not sensitive to environmental conditions. The two-stage fusion of a mm-wave radar and a monocular camera increases reliability in vehicle detection and overall robustness of the relative positioning system, and is capable of achieving a 92% detection rate with no false alarms.

Accordingly, sensor fusion, which blends information from various sensors to provide a clearer view of the vehicle's environment, is a prerequisite for reliable, safe and effective autonomous driving systems.

Which sensors to believe?

In higher levels of automation the data gathered from the sensors is used to form a contextual picture of the car's environment, which is only possible with multi-sensor data fusion. Instead of each system independently performing its own warning or control function in the car, in a fused system the final decision on what action to take is made centrally by a single controller.

Although multiple-sensor fusion has many advantages, the primary benefit is fewer false positives and false negatives.

Any one of the sensors could report a false positive or a false negative if operating under conditions that do not meet the device's design parameters, so it is up to the AI of the self-driving car to eliminate these occurrences.

The AI will typically canvass all the sensors to try to determine whether any one unit is transmitting incorrect data. Some AI systems judge which sensor is right by pre-determining that some sensors are more trustworthy than others. Others use a voting protocol: if X sensors vote that something is there and Y do not, and X exceeds Y by some majority, the decision is carried.
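Both strategies can be sketched in a few lines. The function names, the sensor names and the trust weights below are hypothetical and chosen only for illustration; a production system would derive trust from calibration data and current operating conditions.

```python
def majority_vote(detections, min_margin=1):
    """Carry the decision if 'yes' votes beat 'no' votes by at least min_margin."""
    yes = sum(1 for seen in detections.values() if seen)
    no = len(detections) - yes
    return yes - no >= min_margin


def weighted_vote(detections, trust):
    """Each sensor votes with a pre-assigned trust weight (assumed values)."""
    score = sum(trust[name] if seen else -trust[name]
                for name, seen in detections.items())
    return score > 0


# Foggy scene: the camera misses an obstacle that radar and lidar both see.
foggy = {"camera": False, "radar": True, "lidar": True}
print(majority_vote(foggy))  # two of three sensors agree

# Clear daylight: only the camera flags an object, but it is trusted most here.
clear = {"camera": True, "radar": False, "lidar": False}
daylight_trust = {"camera": 0.9, "radar": 0.3, "lidar": 0.3}
print(majority_vote(clear), weighted_vote(clear, daylight_trust))
```

The second case shows how the two schemes can disagree: a simple majority rejects the camera's detection, while the trust-weighted vote accepts it.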

Another popular method is the Random Sample Consensus (RANSAC) approach. RANSAC is an iterative technique that estimates the parameters of a model by randomly sampling the observed data. Given a dataset containing both inliers and outliers, RANSAC uses a voting scheme to find the best-fit result: each candidate model is scored by how many data points agree with it.
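As a minimal sketch of the idea, the following fits a straight line to points contaminated by outliers. The function name `ransac_line`, the tolerance, the iteration count and the toy data are assumptions for illustration; full implementations usually refit the model on all inliers at the end, a step omitted here.

```python
import random


def ransac_line(points, iterations=200, tolerance=0.5, seed=0):
    """Fit y = m*x + b by repeatedly sampling two points and counting votes."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    best_model, best_inliers = None, []
    for _ in range(iterations):
        (x1, y1), (x2, y2) = rng.sample(points, 2)
        if x1 == x2:
            continue  # a vertical line cannot be written as y = m*x + b
        m = (y2 - y1) / (x2 - x1)
        b = y1 - m * x1
        # Every point within the tolerance "votes" for this candidate line.
        inliers = [(x, y) for x, y in points
                   if abs(y - (m * x + b)) <= tolerance]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = (m, b), inliers
    return best_model, best_inliers


# Ten points on y = 2x + 1 plus three gross outliers (toy data).
points = [(x, 2 * x + 1) for x in range(10)] + [(2, 30), (5, -20), (8, 40)]

model, inliers = ransac_line(points)
print(model, len(inliers))
```

Because the outliers never gather enough votes, the winning line is the one supported by the consistent measurements, which is what makes the approach attractive for rejecting spurious sensor readings.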

Smart sensor-fusion combinations

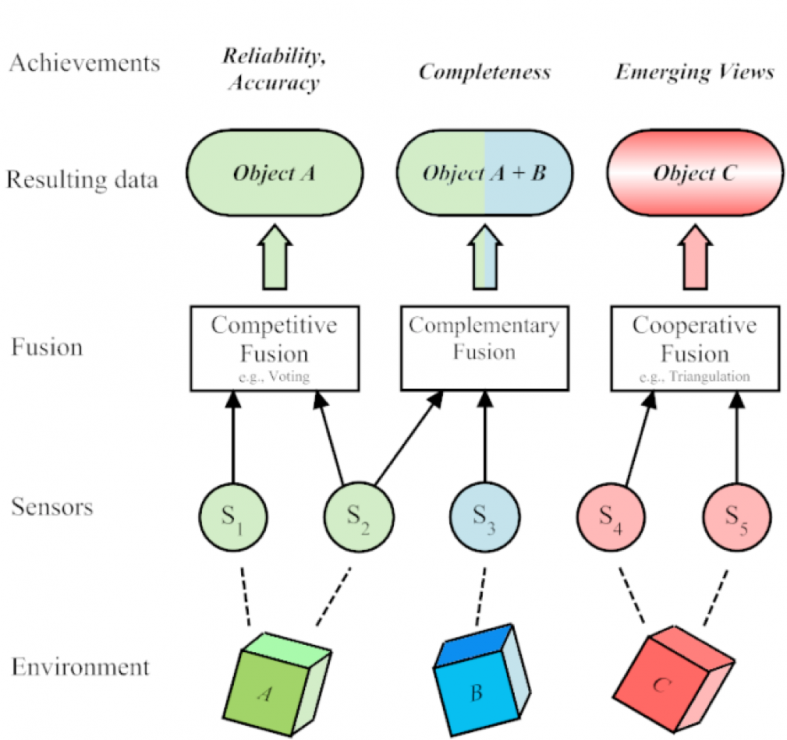

Furthermore, depending on the sensor configuration, sensor fusion can be performed in complementary, competitive, or cooperative combinations:

Image Source: Networking Embedded Systems

A sensor fusion combination is called complementary if the sensors do not directly depend on each other, but can be combined in order to give a more complete image of the environment, providing a spatially or temporally extended view. Generally, fusing complementary data is easy, since the data from independent sensors can be added to each other, but the disadvantage is that under certain conditions the sensors may be ineffective, such as when cameras are used in poor visibility.

An example of a complementary configuration is the employment of multiple cameras each focused on different areas of the car's surroundings to build up a picture of the environment.

Competitive sensor fusion combinations are used for fault-tolerant and robust systems. In a competitive configuration each sensor delivers an independent measurement of the same target, and combining this redundant information makes the system more robust.

There are two possible competitive combinations – the fusion of data from different sensors or the fusion of measurements from a single sensor taken at different instants.

A special case of competitive sensor fusion is fault tolerance. Fault tolerance requires an exact specification of the service and the failure modes of the system. In case of a fault covered by the fault hypothesis, the system still has to provide its specified service.

In contrast to fault tolerance, competitive configurations provide robustness to a system by delivering a degraded level of service in the presence of faults. While this graceful degradation is weaker than the achievement of fault tolerance, the respective algorithms perform better in terms of resource needs and work well with heterogeneous data sources.

An example would be the reduction of noise by combining two overlaying camera images.
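One common way to combine redundant measurements of the same quantity is inverse-variance weighting, sketched below. It assumes the sensor errors are independent and unbiased; the function name `fuse` and the radar and camera figures are invented for illustration.

```python
def fuse(measurements):
    """Fuse (value, variance) pairs describing the same quantity.

    Less noisy sensors receive proportionally more weight. Assumes
    independent, unbiased errors.
    """
    weights = [1.0 / variance for _, variance in measurements]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, measurements)) / total
    return value, 1.0 / total


# Range to the car ahead in meters, as (value, variance) pairs (toy numbers).
radar = (50.0, 0.25)
camera = (52.0, 1.0)

distance, variance = fuse([radar, camera])
print(f"fused distance: {distance:.1f} m, variance: {variance:.2f}")
```

The fused variance is smaller than that of either sensor on its own, which is the noise reduction that competitive fusion aims for.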

A cooperative sensor network uses the information provided by two independent sensors to derive information that would not be available from either sensor alone.

A cooperative fusion combination provides an emergent view of the environment by combining non-redundant information; however, the result is generally sensitive to inaccuracies in all participating sensors.

Because the data produced is sensitive to inaccuracies present in individual sensors, cooperative sensor fusion is probably the most difficult to design. Thus, in contrast to competitive fusion, cooperative sensor fusion generally decreases accuracy and reliability.

An example of a cooperative sensor configuration can be found when stereoscopic vision creates a three-dimensional image by combining two-dimensional images from two cameras at slightly different viewpoints.
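For an idealized, rectified stereo pair, depth follows from the pinhole relation Z = f * B / d, where f is the focal length in pixels, B the distance between the cameras and d the disparity in pixels. The sketch below uses assumed camera parameters (a 1000-pixel focal length and a 0.3 m baseline) that are not taken from the article.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth of a point seen by two rectified cameras: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_px * baseline_m / disparity_px


# Assumed rig: 1000-pixel focal length, cameras mounted 0.3 m apart.
FOCAL_PX = 1000.0
BASELINE_M = 0.3

exact = depth_from_disparity(FOCAL_PX, BASELINE_M, disparity_px=10.0)
off_by_one = depth_from_disparity(FOCAL_PX, BASELINE_M, disparity_px=9.0)

print(f"{exact:.1f} m with 10 px disparity")
print(f"{off_by_one:.1f} m with 9 px disparity")
```

Neither camera alone can supply the depth, and a one-pixel matching error shifts the estimate by several meters at this range, which illustrates why cooperative fusion is sensitive to inaccuracies in every participating sensor.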

To sum up the primary differences in sensor fusion combinations: Competitive fusion combinations increase the robustness of the perception, while cooperative and complementary fusion provide extended and more complete views. Which algorithms are used at the fusion/dissemination level depends on the available resources, such as processing power and working memory, and on application-specific needs at the control level.

However, these three combinations of sensor fusion are not mutually exclusive.

Many applications implement aspects of more than one of the three types. An example of such a hybrid architecture is the use of multiple cameras to monitor a given area. In regions covered by two or more cameras the sensor configuration can be competitive or cooperative. For regions observed by only one camera the sensor configuration is complementary.
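The camera example can be expressed as a simple coverage check. The camera layout below (three cameras with overlapping horizontal fields of view, given as bearing ranges in degrees) and the function names are hypothetical.

```python
# Hypothetical layout: each camera covers a range of bearings in degrees,
# with 0 meaning straight ahead.
CAMERAS = {
    "front": (-60, 60),
    "left": (30, 150),
    "right": (-150, -30),
}


def cameras_covering(bearing_deg, cameras):
    """Names of the cameras whose field of view contains the bearing."""
    return [name for name, (low, high) in cameras.items()
            if low <= bearing_deg <= high]


def fusion_mode(bearing_deg, cameras):
    """Fusion type available at a bearing, based on how many cameras see it."""
    count = len(cameras_covering(bearing_deg, cameras))
    if count == 0:
        return "uncovered"
    if count == 1:
        return "complementary"
    return "competitive or cooperative"


for bearing in (0, 45, 180):
    print(bearing, fusion_mode(bearing, CAMERAS))
```

Straight ahead only the front camera contributes, so its data extends the overall picture; where the front and left views overlap, the two cameras can cross-check each other or be combined for depth.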

So, after more than a hundred years of honing the five human senses to pilot the modern motor car, we've come to the realization that the automated vehicle with its intelligently fused sensor array may in actual fact introduce a sixth sense into the equation.
