Waymo Updates its Open Dataset for Autonomous Vehicles to Help Researchers Better Predict the Behavior of other Road Users

【Summary】Waymo, the self-driving subsidiary of Alphabet Inc. that spun out of Google’s self-driving car project, is adding prediction data to its massive Open Dataset which third parties can use for making their self-driving systems safer. Waymo first released its Open Dataset in 2019. The data was collected from its fleet of self-driving vehicles, which have traveled over 20 million miles.

One of the biggest challenges for developers of self-driving vehicles is predicting the actions of other road users. To navigate safely in an urban environment, self-driving vehicles need a high level of awareness of their surroundings, as well as a sense of what other vehicles, pedestrians and bicyclists might do next.

For human drivers, these predictions are based on the "rules of the road", such as which driver has the right of way in a traffic-controlled intersection.

While autonomous vehicles are programmed to obey the rules of the road and all other traffic laws, human drivers often break these rules, which makes it more difficult to program autonomous vehicles that might one day share the road alongside human drivers.

Waymo is addressing this problem and has announced new updates to its Open Dataset for self-driving vehicles.

The self-driving subsidiary of Alphabet Inc. that spun out of Google's self-driving car project is adding prediction data to its Open Dataset that third parties can use to make their self-driving systems better and safer. For a self-driving vehicle, correctly predicting the behavior of other road users is essential to avoiding crashes.

Waymo's Open Dataset will now include a "motion dataset", which is the largest interactive dataset ever released for research, the company says. The data is intended to support behavior prediction and motion forecasting for autonomous driving.

Datasets like Waymo's are used to train machine learning models. With the data, machine learning algorithms can be used for object detection, identification, localization and behavior prediction, all of which are performed by an autonomous vehicle's software.

Waymo is also sharing a paper describing the state-of-the-art offboard perception method it uses to annotate the motion dataset, so any research team can replicate the techniques that Waymo employs and create its own high-quality motion data. Waymo said it wanted to make the process for creating the new motion dataset as transparent as possible for others.

The paper has been accepted at the annual IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2021).

One of the reasons that this type of data is so valuable is that it's difficult to obtain. Gathering high-quality motion data is a time-consuming and expensive process. But Waymo has done much of the work already. To date, Waymo's fleet of self-driving vehicles has traveled over 20 million miles on public roads, collecting valuable data along the way from an entire suite of sensors.

In addition, generating a motion dataset with high-quality labels requires a sophisticated perception system that can accurately pick out agents and objects from camera and lidar data, then track their movements throughout a scene, according to Waymo.

Interesting or unusual motion data is even harder to gather. Most everyday driving is rather uneventful, which makes for uninformative data if a researcher is building a system to predict what could happen on the road in unusual situations. As a result, existing datasets often don't have many interesting interactions, which are known in the field as "edge cases."

An example of an edge case might be a driver who suddenly crosses three lanes from the left lane to take an exit. A human driver may not see this very often, but an autonomous vehicle might still encounter a situation like this. The Waymo Open Dataset will help researchers better deal with these types of unexpected behaviors.

The dataset contains more than 570 hours of unique data, which the company believes makes it the largest dataset of interactive behaviors ever released for autonomous driving research.

Waymo said its motion dataset includes object trajectories and corresponding 3D maps for over 100,000 segments, each of which is 20 seconds long and was mined specifically to include interesting interactions that other autonomous vehicle developers may not have experienced firsthand in the real world.
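As a quick sanity check on the figures quoted above, the short Python sketch below converts segment counts into hours. It uses only the numbers stated in the article (20-second segments, "over 100,000" segments, "more than 570 hours"); the function names are illustrative and the exact segment count is not given here, so the results are bounds rather than official totals.

```python
import math

SEGMENT_SECONDS = 20      # segment length stated in the article
MIN_SEGMENTS = 100_000    # "over 100,000 segments", treated as a lower bound
QUOTED_HOURS = 570        # "more than 570 hours", treated as a lower bound


def total_hours(num_segments: int, seconds_per_segment: int = SEGMENT_SECONDS) -> float:
    """Total recording time, in hours, for a given number of segments."""
    return num_segments * seconds_per_segment / 3600


def segments_needed(hours: float, seconds_per_segment: int = SEGMENT_SECONDS) -> int:
    """Smallest number of segments whose combined length reaches `hours`."""
    return math.ceil(hours * 3600 / seconds_per_segment)


lower_bound_hours = total_hours(MIN_SEGMENTS)
segments_for_quoted_hours = segments_needed(QUOTED_HOURS)

print(f"100,000 segments cover about {lower_bound_hours:.1f} hours")
print(f"{QUOTED_HOURS} hours requires at least {segments_for_quoted_hours:,} segments")
```

Exactly 100,000 segments would come to roughly 556 hours, so the "more than 570 hours" figure implies a segment count somewhat above 100,000, which is consistent with the "over 100,000" wording.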

Waymo said it purposefully tried to make this data as useful as possible for others, in order to help third parties develop better motion forecasting models.

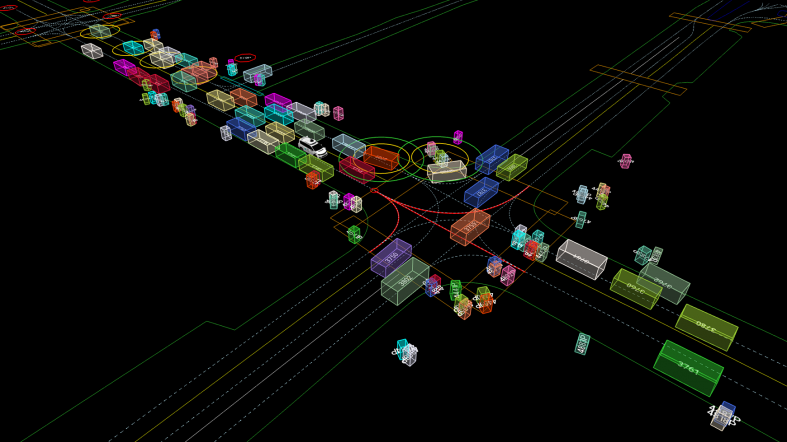

Waymo's software is able to accurately detect and observe dozens of other road users while navigating in San Francisco (Photo: Waymo)

The Waymo Open Dataset features a wide variety of road types and driving conditions captured in the daytime and at night, as well as in different urban environments. It includes data collected in San Francisco, Phoenix, Mountain View, CA, Los Angeles, Detroit and Seattle, to help developers create motion forecasting models for varied driving environments.

Waymo made sure that its new dataset includes interesting examples of how its self-driving vehicles interact with other road users, such as cyclists and vehicles sharing the roadway, cars quickly passing through a busy junction, or groups of pedestrians clustering on the sidewalk.

At 20 seconds, each segment is long enough to train machine learning models to capture complex behaviors.

The segments were collected using high-resolution lidar and front- and side-facing HD cameras from Waymo's self-driving fleet. Waymo also included map information for each scene to provide the semantic context that's vital for making more accurate predictions.

In addition, Waymo says it uses a state-of-the-art offboard perception system to create higher-quality perception (bounding) boxes which are often not found in other datasets.

For developers of autonomous vehicles, bounding boxes are drawn around all the objects in each segment using an annotator. This includes other vehicles, pedestrians or any other relevant objects that developers want to include. The boxes are then labeled so a computer can identify what each object is.

Self-driving vehicle perception systems rely heavily on these bounding boxes to identify objects in their surroundings. This method of annotating objects helps train machine learning algorithms to be able to tell the difference between a tree, pedestrian, traffic light, bicyclist or another vehicle.
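To make the idea of a labeled bounding box concrete, here is a minimal Python sketch. It is not Waymo's label format: the `LabeledBox` class, its fields and the `label_points` helper are hypothetical, and the box is simplified to a top-down (bird's-eye) rectangle with a heading angle. The sketch only shows how a label attached to a box can be passed on to the sensor points that fall inside it.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class LabeledBox:
    """Hypothetical top-down bounding box with a class label (illustration only)."""
    center_x: float
    center_y: float
    length: float   # extent along the heading direction, in meters
    width: float    # extent perpendicular to the heading, in meters
    heading: float  # radians, counter-clockwise from the +x axis
    label: str

    def contains(self, x: float, y: float) -> bool:
        """Return True if the point (x, y) lies inside the rotated rectangle."""
        dx, dy = x - self.center_x, y - self.center_y
        cos_h, sin_h = math.cos(self.heading), math.sin(self.heading)
        # Rotate the offset into the box's own frame of reference.
        along = dx * cos_h + dy * sin_h
        across = -dx * sin_h + dy * cos_h
        return abs(along) <= self.length / 2 and abs(across) <= self.width / 2


def label_points(points: List[Tuple[float, float]], boxes: List[LabeledBox]) -> List[str]:
    """Give each point the label of the first box containing it, else 'unlabeled'."""
    labels = []
    for x, y in points:
        match = next((box.label for box in boxes if box.contains(x, y)), "unlabeled")
        labels.append(match)
    return labels


boxes = [
    LabeledBox(10.0, 5.0, 4.5, 2.0, math.pi / 2, "vehicle"),  # car pointing along +y
    LabeledBox(0.0, 0.0, 0.8, 0.8, 0.0, "pedestrian"),
]
points = [(10.0, 7.0), (12.0, 5.0), (0.2, 0.1)]
point_labels = label_points(points, boxes)
print(point_labels)
```

The second point sits 2 meters to the side of the car's center, outside its 2-meter-wide box, so it stays unlabeled while the other two points pick up the labels of the boxes around them.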

Annotating all of the objects in each scene makes it possible for autonomous vehicles to maneuver through busy urban areas safely, as the vehicle is made aware of all of the moving objects in its immediate environment.

Waymo says this process is important because the better the perception system is at tracking objects, the more accurate the resulting behavior prediction model will be at predicting what they are going to do, for example that a pedestrian may suddenly dart across the street at a green light.
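The link between tracking quality and prediction quality can be illustrated with a deliberately simple Python sketch. It uses a constant-velocity baseline, a common textbook predictor, and not the learned models that Waymo or dataset users would train; the function names, track values and time step are made up for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def predict_constant_velocity(track: List[Point], dt: float, steps: int) -> List[Point]:
    """Extrapolate future positions from the last two tracked positions."""
    if len(track) < 2:
        raise ValueError("need at least two tracked positions")
    (x0, y0), (x1, y1) = track[-2], track[-1]
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt  # velocity estimated from the track
    return [(x1 + vx * dt * k, y1 + vy * dt * k) for k in range(1, steps + 1)]


def final_displacement(a: List[Point], b: List[Point]) -> float:
    """Distance between the last points of two predicted paths."""
    return math.hypot(a[-1][0] - b[-1][0], a[-1][1] - b[-1][1])


DT = 0.5  # seconds between tracked positions (assumed for this example)

clean_track = [(0.0, 0.0), (1.0, 0.5), (2.0, 1.0)]
noisy_track = [(0.0, 0.0), (1.0, 0.5), (2.0, 1.2)]  # last position off by 0.2 m

clean_forecast = predict_constant_velocity(clean_track, DT, steps=3)
noisy_forecast = predict_constant_velocity(noisy_track, DT, steps=3)
forecast_gap = final_displacement(clean_forecast, noisy_forecast)

print(clean_forecast)
print(f"forecast gap after 3 steps: {forecast_gap:.2f} m")
```

In this toy case a 0.2-meter error in the last tracked position grows to roughly 0.8 meters after three prediction steps, because the error also corrupts the velocity estimate. That is the intuition behind wanting accurate tracks before training or evaluating a predictor.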

By sharing its offboard perception techniques, Waymo makes it easier for researchers to scrutinize the quality of the data it's releasing. It also gives researchers and developers the recipe to create their own high-quality motion datasets from sensor logs capturing the scene.

Waymo released its Open Dataset in August 2019. At the time, the data had been collected by a fleet of Waymo self-driving vehicles that traveled over 10 million miles in 25 different cities.

This data is an invaluable tool for developers working on autonomous driving. Waymo's own engineers use the dataset to develop self-driving technology and innovative machine learning models and algorithms.

With the release of Waymo's newest motion dataset, engineers outside of Waymo are getting access, for the first time, to the same data that Waymo uses.

Waymo believes that its Open Dataset will help speed up the development of self-driving technology by sharing data and thereby promoting collaboration among developers, even if they are outside of the company.

"The more smart brains you can get working on the problem, whether inside or outside the company, the better," said Waymo Distinguished Research Scientist Drago Anguelov in 2019.

The Waymo Open Dataset helps researchers outside of Waymo make advances in perception, as well as improve behavior prediction, which is especially useful for navigating in dense urban areas where there are many pedestrians, vehicles and other road users that a self-driving vehicle has to deal with.

Waymo is not the only company sharing self-driving vehicle data to aid developers and researchers. In China, tech giant Baidu Inc. released its "Apollo Scape" dataset in March 2018, which was billed at the time as the world's largest open-source dataset for autonomous driving technology.

The dataset is part of Baidu's Apollo open autonomous driving platform. The Apollo platform was designed to speed up the development of autonomous driving technology through collaboration with industry partners.

Like Waymo's Open Dataset, Apollo Scape can be used for perception, and it allows autonomous vehicles to be trained for more complex urban driving environments, weather and traffic conditions.

Waymo also announced the next round of its "Open Dataset Challenges", which award cash prizes to developers to encourage new research in perception and behavior prediction.

Interested parties can access Waymo's Open Dataset for free here.
